Spark Streaming, Kinesis, and EMR Pain Points by Chris Clouten disneystreaming

IoT with Amazon Kinesis and Spark Streaming on Qubole

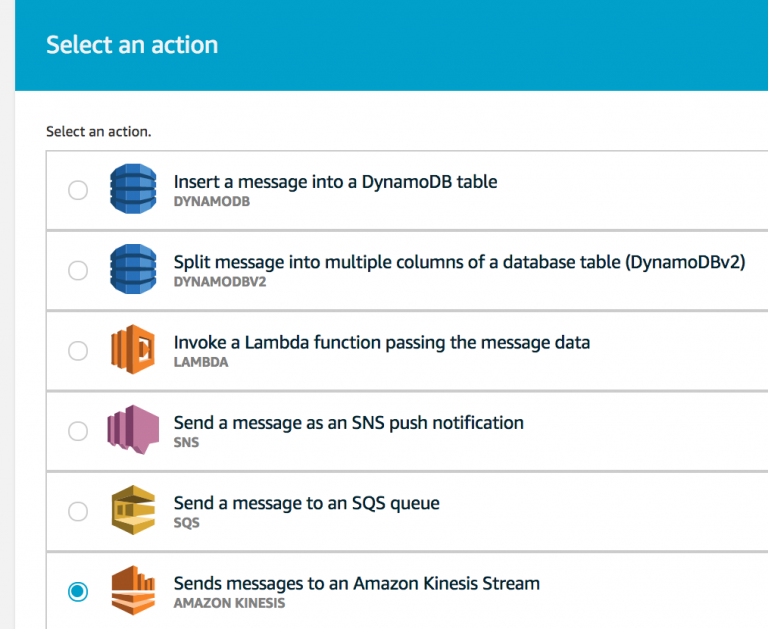

For more information, see Example: Read From a Kinesis Stream in a Different Account. AWS Glue streaming ETL jobs can auto-detect compressed data, transparently decompress the streaming data, perform the usual transformations on the input source, and load the results into the output store. Choose Spark streaming.
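The decompression step described above can be pictured with a small Python sketch. It is not AWS Glue's implementation: it assumes compressed records are recognised by their magic bytes, handles only gzip and bzip2, and the helper names `maybe_decompress` and `decode_record` are hypothetical.

```python
import bz2
import gzip
import json

# Assumption for this sketch: only gzip and bzip2 are detected, by their
# leading magic bytes; anything else is treated as uncompressed.
GZIP_MAGIC = b"\x1f\x8b"
BZIP2_MAGIC = b"BZh"


def maybe_decompress(payload: bytes) -> bytes:
    """Return the decompressed payload if it looks compressed, else return it unchanged."""
    if payload.startswith(GZIP_MAGIC):
        return gzip.decompress(payload)
    if payload.startswith(BZIP2_MAGIC):
        return bz2.decompress(payload)
    return payload


def decode_record(payload: bytes) -> dict:
    """Transparently decompress (if needed) and parse one JSON record."""
    return json.loads(maybe_decompress(payload).decode("utf-8"))


if __name__ == "__main__":
    raw = json.dumps({"device": "sensor-1", "temp": 21.5}).encode("utf-8")
    # The same record arrives plain, gzip-compressed and bzip2-compressed.
    for payload in (raw, gzip.compress(raw), bz2.compress(raw)):
        print(decode_record(payload))
```

Because detection happens per record, a stream that mixes compressed and uncompressed payloads is still parsed by the same downstream code.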

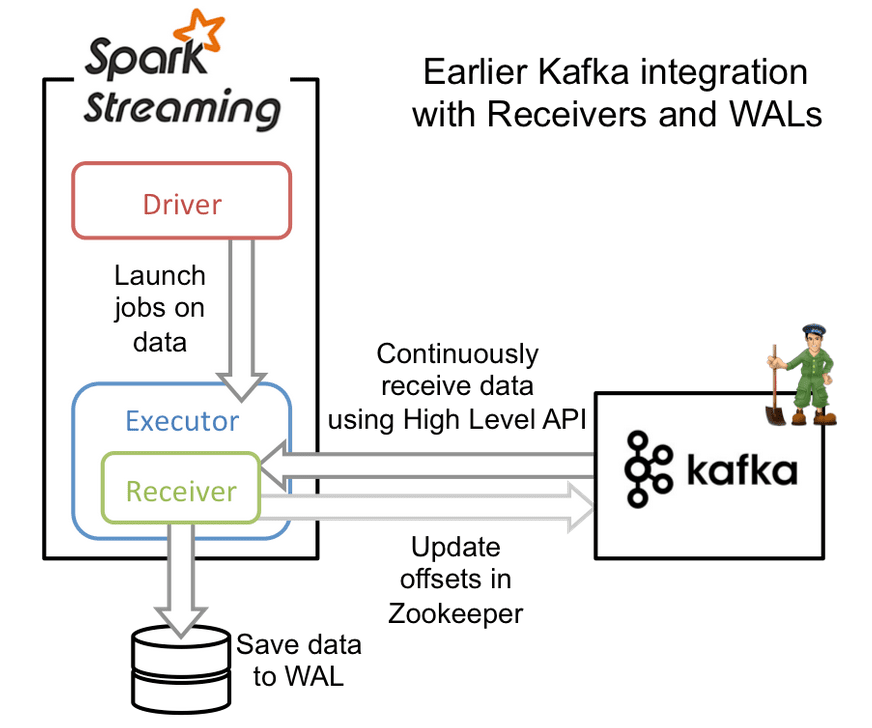

Improvements to Kafka integration of Spark Streaming Databricks Blog

I modified this example and used my own values for "app-name", "stream-name" and "endpoint-url". I have placed various print statements within my code. When running the job with the "spark-submit" command, I don't see any of the print output in the stdout logs. Can someone please explain where I can find the output of these print statements?

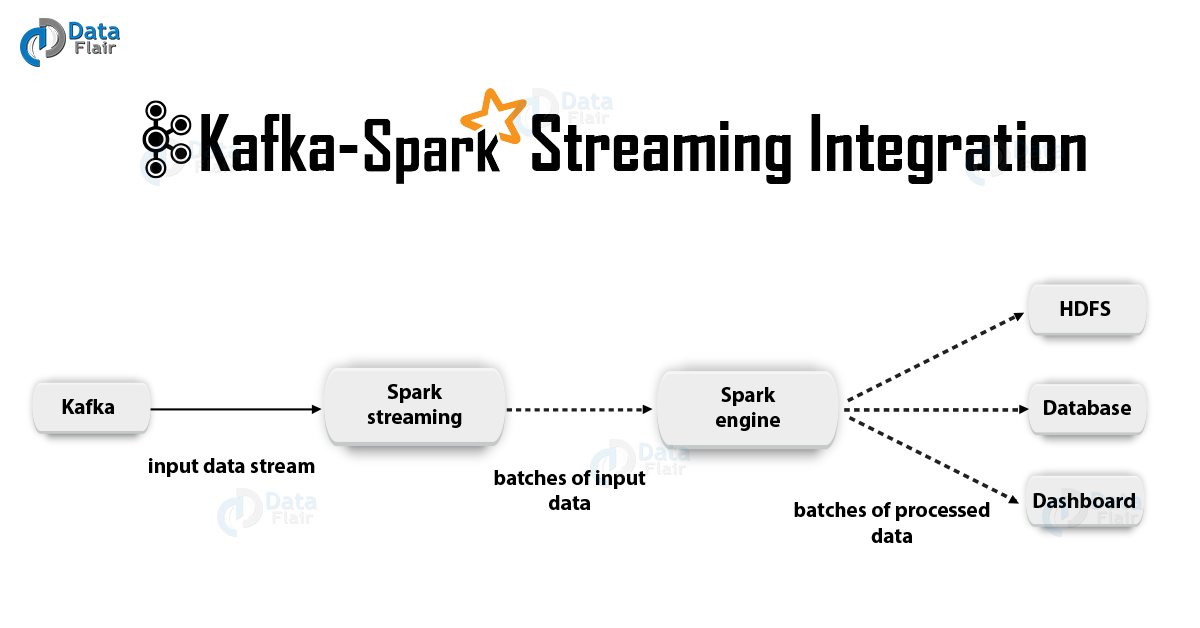

Apache Kafka + Spark Streaming Integration DataFlair

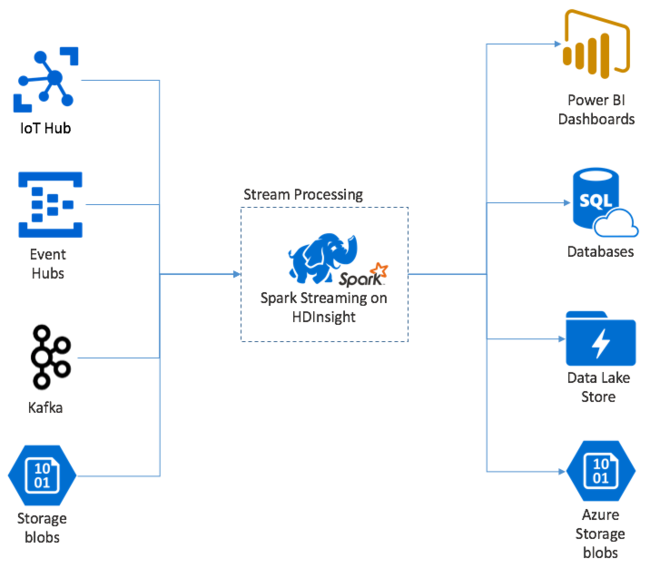

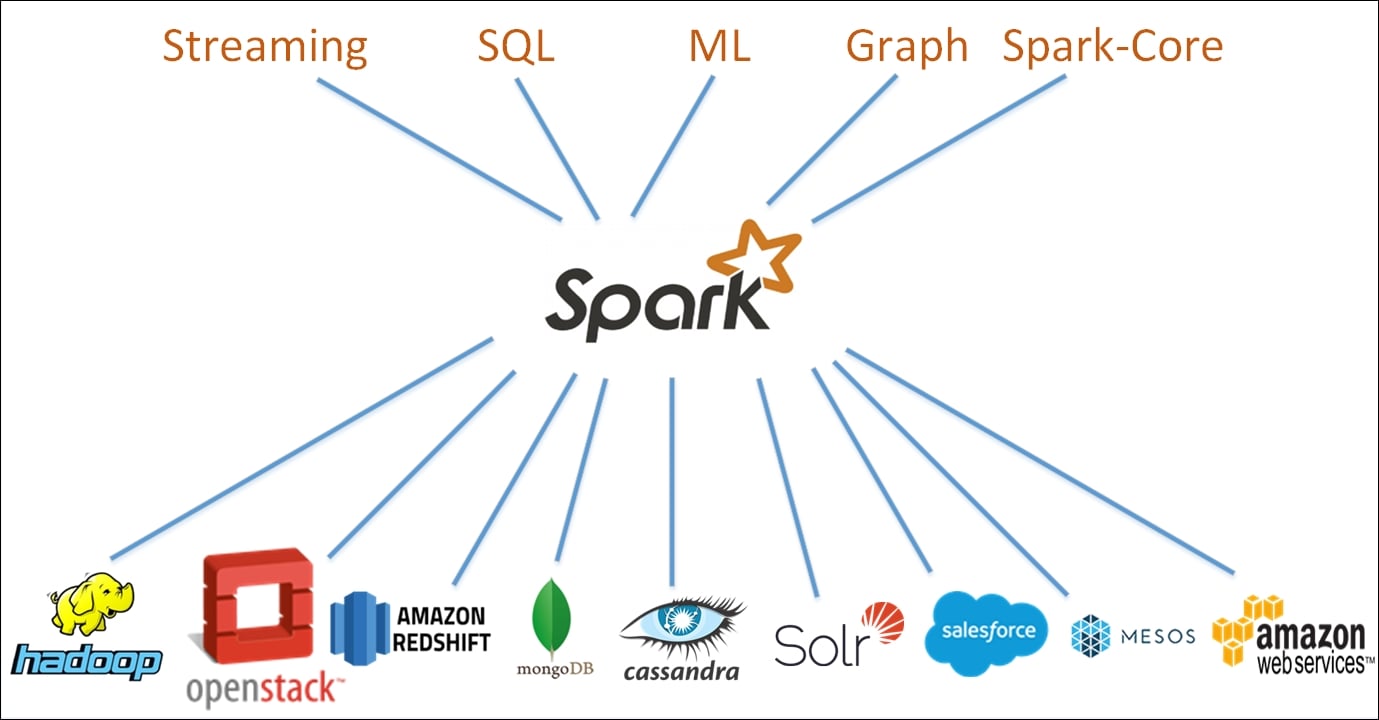

Apache Spark Streaming is a scalable, high-throughput, fault-tolerant stream processing system that supports both batch and streaming workloads. It is an extension of the core Spark API that processes real-time data from sources such as Kafka, Flume, and Amazon Kinesis, to name a few.

Streaming Twitter analysis Spark & Kinesis Towards Data Science

Spark Streaming + Kinesis Integration

Amazon Kinesis is a fully managed service for real-time processing of streaming data at massive scale. The Kinesis receiver creates an input DStream using the Kinesis Client Library (KCL) provided by Amazon under the Amazon Software License (ASL).

Processing Kinesis Data Streams with Spark Streaming

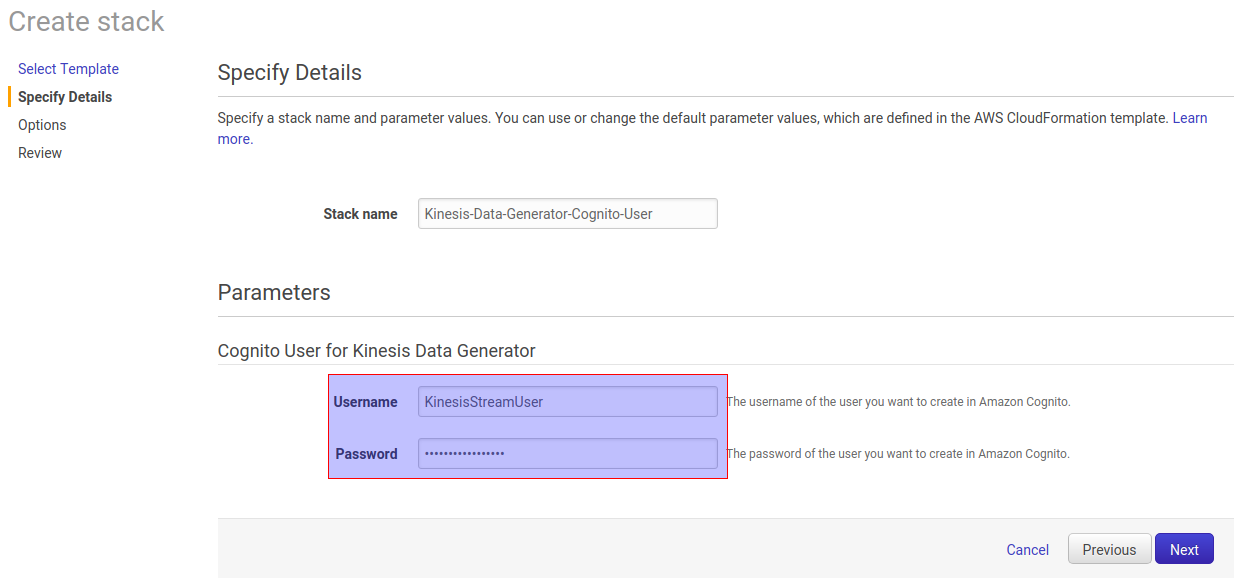

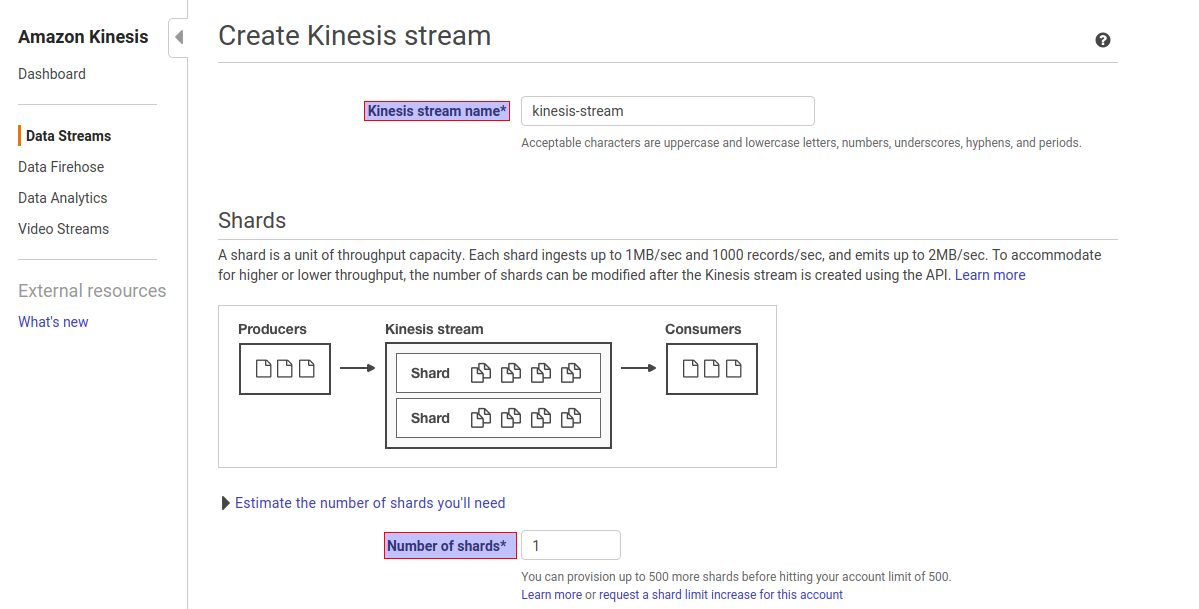

Step 1. Go to the Amazon Kinesis console and click Create Data Stream.

Step 2. Give the Kinesis stream a name and set the number of shards according to the volume of incoming data. In this case, the stream name is kinesis-stream and the number of shards is 1.

Shards in Kinesis Data Streams

A shard is a uniquely identified sequence of data records in a stream.
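As a rough sketch of the two shard-related ideas above, the Python below estimates a shard count from the incoming volume and maps a partition key to a shard. It assumes the per-shard limits of a provisioned stream with standard consumers (1 MiB/s or 1,000 records/s of writes, 2 MiB/s of reads shared by all consumers) and assumes the 128-bit MD5 hash key space is split evenly across shards; a real stream's ranges should be read from each shard's `HashKeyRange` (for example via ListShards), since resharding can make them uneven. All function names are hypothetical.

```python
import hashlib
import math
from typing import List, Tuple

# Assumed per-shard limits for a provisioned stream with standard (shared) consumers.
WRITE_KIB_PER_SHARD = 1024      # 1 MiB/s of writes
WRITE_RECORDS_PER_SHARD = 1000  # 1,000 records/s of writes
READ_KIB_PER_SHARD = 2048       # 2 MiB/s of reads, shared by all standard consumers

HASH_SPACE = 2 ** 128  # partition keys are hashed with MD5 into a 128-bit space


def estimate_shards(avg_record_kib: float, records_per_sec: float, consumers: int) -> int:
    """Smallest shard count satisfying write bandwidth, write rate and read bandwidth."""
    incoming_kib = avg_record_kib * records_per_sec
    outgoing_kib = incoming_kib * consumers  # every consumer reads every record
    needed = max(
        incoming_kib / WRITE_KIB_PER_SHARD,
        records_per_sec / WRITE_RECORDS_PER_SHARD,
        outgoing_kib / READ_KIB_PER_SHARD,
    )
    return max(1, math.ceil(needed))


def even_hash_ranges(shard_count: int) -> List[Tuple[int, int]]:
    """Split the hash space into contiguous, near-equal [start, end] ranges (assumed layout)."""
    step = HASH_SPACE // shard_count
    ranges = []
    for i in range(shard_count):
        start = i * step
        # The last shard absorbs any remainder so the ranges cover the full space.
        end = HASH_SPACE - 1 if i == shard_count - 1 else (i + 1) * step - 1
        ranges.append((start, end))
    return ranges


def shard_for(partition_key: str, ranges: List[Tuple[int, int]]) -> int:
    """Index of the range containing MD5(partition_key), read as a big-endian integer."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    h = int.from_bytes(digest, "big")
    for idx, (start, end) in enumerate(ranges):
        if start <= h <= end:
            return idx
    raise ValueError("hash ranges do not cover the whole key space")


if __name__ == "__main__":
    # Small records at a few hundred per second with one consumer.
    print("shards needed:", estimate_shards(avg_record_kib=1, records_per_sec=500, consumers=1))  # 1
    ranges = even_hash_ranges(4)
    for key in ("sensor-1", "sensor-2", "sensor-3"):
        print(key, "-> shard", shard_for(key, ranges))
```

In terms of bandwidth, the read limit becomes the binding constraint once there are three or more standard consumers, because each shard's read throughput is only twice its write throughput and is shared by all of them.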

What is Spark Streaming and what does it offer? Alura

Spark Streaming is an extension of the core Spark framework that enables scalable, high-throughput, fault-tolerant stream processing of data streams such as Amazon Kinesis Streams. Spark Streaming provides a high-level abstraction called a Discretized Stream or DStream, which represents a continuous sequence of RDDs.
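To make the "continuous sequence of RDDs" idea concrete, here is a toy Python model of discretization: records are bucketed by arrival time into fixed batch intervals, and the same transformation then runs on each batch. Plain lists stand in for RDDs, so this only illustrates the concept and is not Spark's API; `discretize` and `per_batch_counts` are hypothetical names.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple


def discretize(events: Iterable[Tuple[float, str]], batch_interval: float) -> List[List[str]]:
    """Group (arrival_seconds, record) pairs into consecutive fixed-length batches.

    Batch i holds records that arrived in [i * batch_interval, (i + 1) * batch_interval).
    Intervals with no arrivals still produce an empty batch, mirroring the way a
    DStream yields one (possibly empty) RDD per batch interval.
    """
    buckets: Dict[int, List[str]] = defaultdict(list)
    last = -1
    for arrival, record in events:
        idx = int(arrival // batch_interval)
        buckets[idx].append(record)
        last = max(last, idx)
    return [buckets[i] for i in range(last + 1)]


def per_batch_counts(batches: List[List[str]]) -> List[Dict[str, int]]:
    """Apply the same transformation (a count per record value) to every batch independently."""
    return [dict(Counter(batch)) for batch in batches]


if __name__ == "__main__":
    events = [(0.2, "click"), (0.7, "view"), (1.1, "click"), (3.4, "click")]
    batches = discretize(events, batch_interval=1.0)
    print(batches)  # [['click', 'view'], ['click'], [], ['click']]
    print(per_batch_counts(batches))
```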

Spark Streaming Different Output modes explained Spark By {Examples}

Apache Spark version 2.0 introduced the first version of the Structured Streaming API, which enables developers to create end-to-end fault-tolerant streaming jobs. Although the Structured…
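Structured Streaming writes results to a sink according to an output mode. The toy Python simulation below contrasts two of them for a running word count: Complete mode emits the whole result table on every trigger, while Update mode emits only the rows that changed in that trigger. Append mode is left out because Spark does not allow it for streaming aggregations without a watermark. Plain dictionaries stand in for Spark's result table, and `run_word_count` is a hypothetical name.

```python
from collections import Counter
from typing import Dict, List


def run_word_count(triggers: List[List[str]], mode: str) -> List[Dict[str, int]]:
    """Simulate what a running word count emits to the sink at each trigger.

    mode="complete": the entire result table is emitted every trigger.
    mode="update":   only rows whose count changed in this trigger are emitted.
    """
    if mode not in ("complete", "update"):
        raise ValueError("this sketch only models 'complete' and 'update'")
    state: Counter = Counter()  # the running result table kept across triggers
    emitted = []
    for batch in triggers:
        changed = set(batch)
        state.update(batch)
        if mode == "complete":
            emitted.append(dict(state))  # copy, so later triggers don't alter it
        else:
            emitted.append({word: state[word] for word in changed})
    return emitted


if __name__ == "__main__":
    triggers = [["a", "b", "a"], ["b", "c"]]
    print("complete:", run_word_count(triggers, "complete"))
    print("update:  ", run_word_count(triggers, "update"))
```

The difference matters for large aggregations: Complete mode rewrites every row on each trigger, while Update mode sends only the changed rows, which a sink that supports upserts can apply incrementally.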

Optimize SparkStreaming to Efficiently Process Amazon Kinesis Streams AWS Big Data Blog

Spark Structured Stream - Kinesis as Data Source

I am trying to consume Kinesis data stream records using PySpark Structured Streaming. I am trying to run this code in an AWS Glue batch job.

Spark Streaming in Azure HDInsight Microsoft Learn

Here we explain how to configure Spark Streaming to receive data from Kinesis.

Configuring Kinesis

A Kinesis stream can be set up at one of the valid Kinesis endpoints with 1 or more shards per the following guide.

Configuring Spark Streaming Application

An Introduction to Spark Streaming by Harshit Agarwal Medium

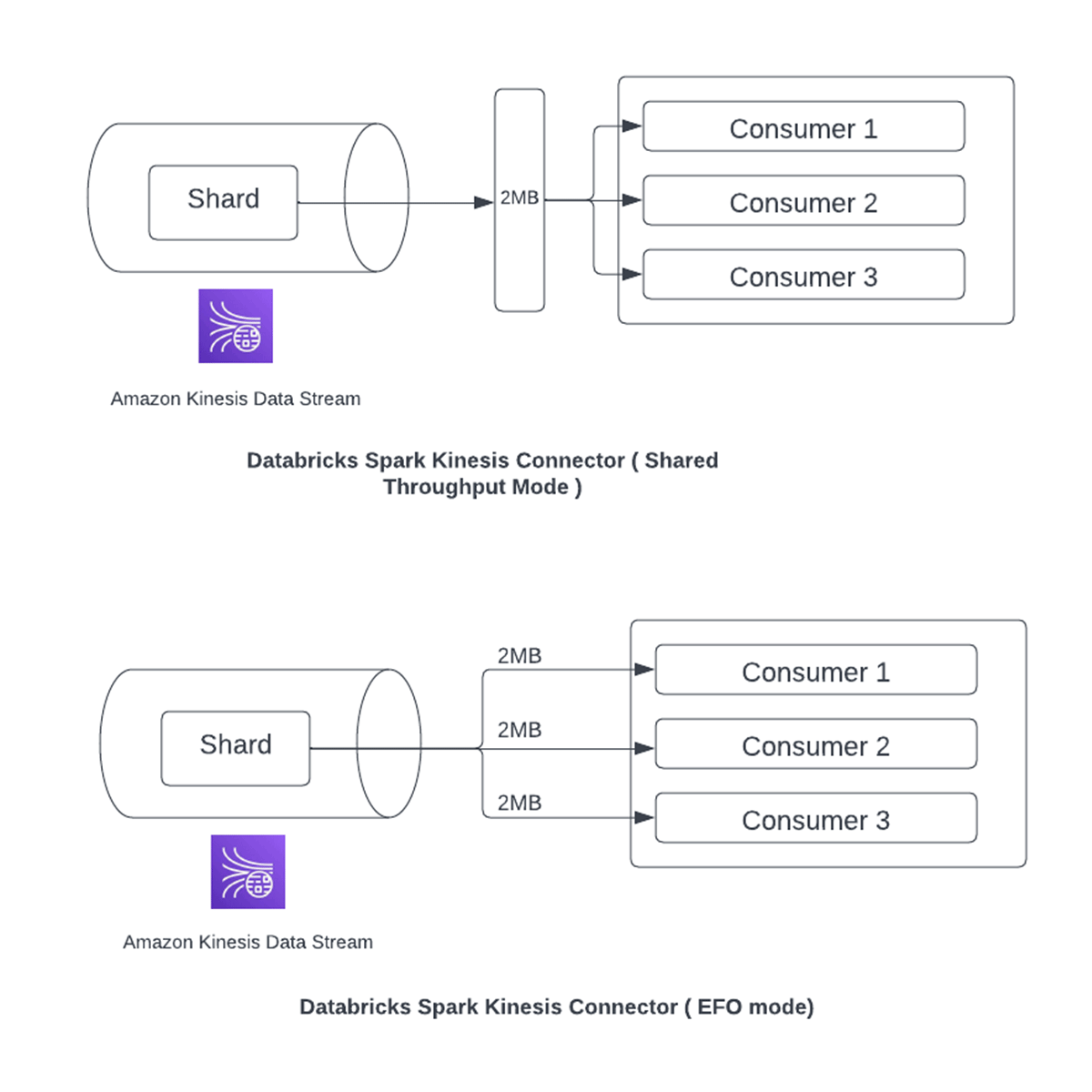

This article describes best practices when using Kinesis as a streaming source with Delta Lake and Apache Spark Structured Streaming. Amazon Kinesis Data Streams (KDS) is a massively scalable and durable real-time data streaming service. KDS continuously captures gigabytes of data per second from hundreds of thousands of sources such as website…

Spark Streaming with Kafka Example Spark By {Examples}

Apache Spark is a unified analytics engine for large-scale data processing. It provides high-level APIs in Java, Scala, Python and R, and an optimized engine that supports general execution graphs. For more information on consuming Kinesis Data Streams using Spark Streaming, see Spark Streaming + Kinesis Integration.

What is Spark Streaming? The Ultimate Guide [Updated]

This Spark Streaming with Kinesis tutorial intends to help you become better at integrating the two. In this tutorial, we'll examine some custom Spark Kinesis code and also show a screencast of running it. In addition, we're going to cover running, configuring, sending sample data and AWS setup.

We will do the following steps: create a Kinesis stream in AWS using boto3, write some simple JSON messages into the stream, consume the messages in PySpark, and display the messages in the console.

Step 1: Set up PySpark for Jupyter. To run PySpark in the notebook, we have to use the findspark package.
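The "write some simple JSON messages into the stream" step can be sketched in plain Python around a boto3-style client. The sketch assumes a client object exposing `put_records(StreamName=..., Records=...)`, as boto3's Kinesis client does, and splits the messages into batches because PutRecords accepts at most 500 records per call. The stream name, the `key_field` choice and the helper names are hypothetical, and rejected records are only counted here, not retried.

```python
import json
from typing import Any, Dict, Iterable, Iterator, List

MAX_RECORDS_PER_CALL = 500  # PutRecords accepts at most 500 records per request


def to_entries(messages: Iterable[Dict[str, Any]], key_field: str) -> List[Dict[str, Any]]:
    """Encode each message as JSON bytes and use one of its fields as the partition key."""
    return [
        {"Data": json.dumps(msg).encode("utf-8"), "PartitionKey": str(msg[key_field])}
        for msg in messages
    ]


def chunks(entries: List[Dict[str, Any]], size: int = MAX_RECORDS_PER_CALL) -> Iterator[List[Dict[str, Any]]]:
    """Yield successive slices of at most `size` entries."""
    for start in range(0, len(entries), size):
        yield entries[start:start + size]


def send_messages(client: Any, stream_name: str, messages: Iterable[Dict[str, Any]], key_field: str) -> int:
    """Write messages with client.put_records and return how many records were rejected.

    `client` is assumed to be a boto3 Kinesis client (or anything with the same
    put_records signature). Failed records are counted but NOT retried.
    """
    failed = 0
    for batch in chunks(to_entries(messages, key_field)):
        response = client.put_records(StreamName=stream_name, Records=batch)
        failed += response.get("FailedRecordCount", 0)
    return failed
```

In practice `client` would come from `boto3.client("kinesis", region_name=...)` and be passed in with a real stream name; taking the client as a parameter also lets the function be exercised with a stand-in object and no AWS account.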

Simplify Streaming Infrastructure With Enhanced Fan-Out Support for Kinesis Data Streams in

Spark Structured Streaming is a high-level API built on Apache Spark that simplifies the development of scalable, fault-tolerant, and real-time data processing applications. By seamlessly…

GitHub snowplow/sparkstreamingexampleproject A Spark Streaming job reading events from

Apache Spark's Structured Streaming with Amazon Kinesis on Databricks by Jules Damji August 9, 2017 in Company Blog On July 11, 2017, we announced the general availability of Apache Spark 2.2.0 as part of Databricks Runtime 3.0 (DBR) for the Unified Analytics Platform.